44 soft labels deep learning

Deep Learning from Noisy Image Labels with Quality Embedding Specifically, it consists of two important layers: (1) the contrastive layer estimates the quality variable in the embedding space to reduce the noise effect; (2) the additive layer aggregates prior predictions and noisy labels as posterior to train the classifier.

(PDF) Deep learning with noisy labels: Exploring techniques and ... In this paper, we first review the state-of-the-art in handling label noise in deep learning. Then, we review studies that have dealt with label noise in deep learning for medical image analysis ...

[2007.05836] Meta Soft Label Generation for Noisy Labels The existence of noisy labels in the dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which can jointly generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion.

Soft labels deep learning

Understanding Deep Learning on Controlled Noisy Labels Posted by Lu Jiang, Senior Research Scientist and Weilong Yang, Senior Staff Software Engineer, Google Research. The success of deep neural networks depends on access to high-quality labeled training data, as the presence of label errors (label noise) in training data can greatly reduce the accuracy of models on clean test data. Unfortunately, large training datasets almost always contain ...

Deep Learning: A Comprehensive Overview on Techniques ... Aug 18, 2021 · Deep learning (DL), a branch of machine learning (ML) and artificial intelligence (AI) is nowadays considered as a core technology of today’s Fourth Industrial Revolution (4IR or Industry 4.0). Due to its learning capabilities from data, DL technology originated from artificial neural network (ANN), has become a hot topic in the context of computing, and is widely applied in various ...

Label-Free Quantification You Can Count On: A Deep Learning Experiment Although it shows excellent correspondence between the two methods, the total number of objects detected with deep learning was around 3% higher. Figure 2: Nuclei detected using fluorescence (left), the corresponding brightfield image (middle), and object shape predicted by deep learning technology (right).

Technical Note: Deep learning based MRAC using rapid ultrashort echo ... The tissue labels estimated by deep learning are refined by a conditional random field based correction. The soft tissue labels are further separated into fat and water components using the two-point Dixon method. ... Result: Dice coefficients for air (within the head), soft tissue, and bone labels were 0.76 ± 0.03, 0.96 ± 0.006, and 0.88 ± ...

An Introduction to Confident Learning: Finding and Learning with Label ... I recommend mapping the labels to 0, 1, 2. Then after training, when you predict, you can call classifier.predict_proba() and it will give you the probabilities for each class. So an example with 50% probability of class label 1 and 50% probability of class label 2 would give you the output [0, 0.5, 0.5].

What is Label Smoothing? - Towards Data Science Label smoothing is used when the loss function is cross entropy, and the model applies the softmax function to the penultimate layer's logit vectors z to compute its output probabilities p. In this setting, the gradient of the cross entropy loss function with respect to the logits is simply ∇CE = p - y = softmax(z) - y.

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignments, where class labels are binary (i.e., positive for one class and negative for all other classes), soft label assignment allows the positive class to have the largest probability while all other classes have a very small probability.
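The gradient identity quoted above, ∇CE = softmax(z) - y, also holds when y is a soft label, as long as its entries sum to 1. The following is a minimal sketch that checks the identity numerically with finite differences. The logit values are illustrative assumptions, and the soft target reuses the [0, 0.5, 0.5] vector from the predict_proba example; none of this is taken from the cited articles' own code.

```python
import math

def softmax(z):
    """Numerically stable softmax over a list of logits."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(z, y):
    """Cross entropy between a target distribution y and softmax(z)."""
    p = softmax(z)
    return -sum(yi * math.log(pi) for yi, pi in zip(y, p))

def analytic_grad(z, y):
    """Gradient of the loss with respect to the logits: softmax(z) - y."""
    return [pi - yi for pi, yi in zip(softmax(z), y)]

def numeric_grad(z, y, h=1e-6):
    """Central finite-difference estimate of the same gradient."""
    grad = []
    for i in range(len(z)):
        up = z[:i] + [z[i] + h] + z[i + 1:]
        down = z[:i] + [z[i] - h] + z[i + 1:]
        grad.append((cross_entropy(up, y) - cross_entropy(down, y)) / (2 * h))
    return grad

logits = [2.0, 0.5, -1.0]      # illustrative logits (assumption)
soft_target = [0.0, 0.5, 0.5]  # soft label: 50% class 1, 50% class 2

max_gap = max(
    abs(a - n)
    for a, n in zip(analytic_grad(logits, soft_target),
                    numeric_grad(logits, soft_target))
)
print("largest gap between analytic and numeric gradient:", max_gap)
```

The printed gap should be tiny, which is what one would expect if the closed-form gradient is correct for soft targets as well as one-hot ones.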

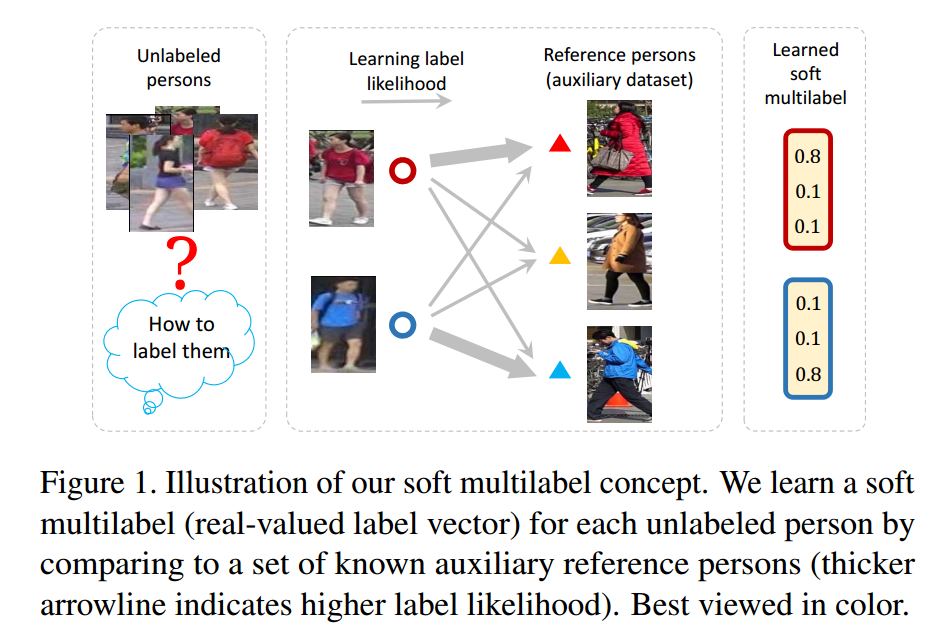

MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels - DeepAI Soft-labels are generated from extracted features of data instances, and the mapping function is learned by a single layer perceptron (SLP) network, which is called MetaLabelNet. The base classifier is then trained using these generated soft-labels. These iterations are repeated for each batch of training data.

A review of deep learning methods for semantic segmentation ... May 01, 2021 · Semantic segmentation of remote sensing imagery has been employed in many applications and has been a key research topic for decades. With the success of deep learning methods in the field of computer vision, researchers have made a great effort to transfer their superior performance to the field of remote sensing image analysis.

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub 2017-Arxiv - Deep Learning is Robust to Massive Label Noise. [Paper] 2017-Arxiv - Fidelity-weighted learning. [Paper] 2017 - Self-Error-Correcting Convolutional Neural Network for Learning with Noisy Labels. [Paper] 2017-Arxiv - Learning with confident examples: Rank pruning for robust classification with noisy labels. [Paper] [Code]

PDF Unsupervised Person Re-Identification by Soft Multilabel Learning in the absence of pairwise labels across disjoint camera views. To overcome this problem, we propose a deep model for the soft multilabel learning for unsupervised RE-ID. The idea is to learn a soft multilabel (real-valued label likelihood vector) for each unlabeled person by comparing the unlabeled person with a set of known reference persons ...

Labels · ruasoft/Deep-Learning-MNIST-TF2-Keras · GitHub Deep Learning and MNIST. Contribute to ruasoft/Deep-Learning-MNIST-TF2-Keras development by creating an account on GitHub.

Meta Soft Label Generation for Noisy Labels - arxiv-vanity.com The existence of noisy labels in the dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which can jointly generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion. Our approach adapts the meta-learning paradigm to estimate optimal ...

Unsupervised deep hashing through learning soft pseudo label for remote ... We design a deep auto-encoder network SPLNet, which can automatically learn soft pseudo-labels and generate a local semantic similarity matrix. The soft pseudo-labels represent the global similarity between inter-cluster RS images, and the local semantic similarity matrix describes the local proximity between intra-cluster RS images.

Robust Training of Deep Neural Networks with Noisy Labels by Graph ... 2.1 Deep Neural Networks with Noisy Labels. Several deep learning-based methods have been proposed to address image classification with noisy labels. In addition to co-teaching and pseudo-labeling methods [11, 13, 18], some methods estimate the transition matrix of the noise to train a robust model. Goldberger et al. proposed a method to model the noise transition matrix by adding a ...
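To make the transition-matrix idea mentioned above more concrete, here is a minimal sketch of how a noise transition matrix turns a classifier's clean-class probabilities into a distribution over noisy labels. It illustrates the general concept only and is not the method of Goldberger et al. or of the cited paper. The function name, the matrix values, and the convention that T[i][j] is the probability of observing noisy label j when the true class is i are all assumptions.

```python
def noisy_label_distribution(clean_probs, transition):
    """Distribution over observed (noisy) labels.

    transition[i][j] is assumed to be P(noisy label = j | true class = i),
    so each row of the matrix sums to 1.
    """
    k = len(clean_probs)
    return [
        sum(clean_probs[i] * transition[i][j] for i in range(k))
        for j in range(k)
    ]

# Hypothetical two-class noise model: class 0 is flipped to 1 with
# probability 0.2, class 1 is flipped to 0 with probability 0.1.
T = [
    [0.8, 0.2],
    [0.1, 0.9],
]
clean = [0.7, 0.3]  # illustrative classifier output on the clean classes

noisy = noisy_label_distribution(clean, T)
print(noisy)  # roughly [0.59, 0.41]
```

Training against the noisy labels with this adjusted distribution, rather than the raw classifier output, is one way such methods try to keep the underlying classifier aligned with the clean classes.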

How to make use of "soft" labels in binary classification - Quora If you choose soft prediction, the output of the model would look like: [0.9, 0.1]; and the output from hard prediction would be "0" (the index) or "fraud". The soft prediction gives you more information about the model's confidence in prediction. The higher the value for the predicted class, the more confident and accurate (in general) the ...
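The difference between a soft and a hard prediction described in this snippet can be sketched in a few lines. The helper name and the second class name ("not fraud") are assumptions for illustration; only the [0.9, 0.1] vector, the index 0, and the "fraud" label come from the text.

```python
def to_hard_prediction(soft_prediction, class_names):
    """Collapse a soft prediction into (index, class name) via argmax."""
    index = max(range(len(soft_prediction)), key=lambda i: soft_prediction[i])
    return index, class_names[index]

soft = [0.9, 0.1]                 # soft prediction from the text
classes = ["fraud", "not fraud"]  # second name is an assumption

index, name = to_hard_prediction(soft, classes)
print(index, name)  # 0 fraud
```

The hard prediction keeps only the winning class, so the 0.9 confidence carried by the soft prediction is lost in the conversion.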

Soft-Label Guided Semi-Supervised Learning for Bi-Ventricle ... Deep convolutional neural networks have been applied to medical image segmentation tasks successfully in recent years by taking advantage of a large amount of training data with golden standard annotations. However, it is difficult and expensive to obtain good-quality annotations in practice. This work aims to propose a novel semi-supervised learning framework to improve the ventricle ...

PDF MixNN: Combating Noisy Labels in Deep Learning by Mixing with Nearest ... During an "early learning" phase, deep neural networks were observed to fit the clean samples before memorizing the mislabeled samples. In this paper, we dig deeper into the representation ... [24] iteratively updates the labels with soft or hard pseudo-labels. PENCIL [26] refines the relabel procedure without using prior information ...

Label Smoothing: An ingredient of higher model accuracy Your labels would be 0 for cat and 1 for not cat. Now, say you set label_smoothing = 0.2. Using the equation above, we get: new_onehot_labels = [0 1] * (1 - 0.2) + 0.2 / 2 = [0 1] * 0.8 + 0.1, so new_onehot_labels = [0.1 0.9]. These are soft labels, instead of the hard labels 0 and 1.
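The smoothing arithmetic in this snippet can be sketched as a small function. This is an illustration of the formula as quoted, onehot * (1 - label_smoothing) + label_smoothing / num_classes, and the function name is an assumption rather than any library's API. For the one-hot vector [0 1] with label_smoothing = 0.2 it gives approximately [0.1 0.9], so the largest entry stays in the same position as the original 1.

```python
def smooth_labels(onehot, label_smoothing):
    """Apply label smoothing to a one-hot vector.

    Each entry is scaled by (1 - label_smoothing) and then shifted by
    label_smoothing / num_classes, so the result still sums to 1.
    """
    num_classes = len(onehot)
    return [
        y * (1.0 - label_smoothing) + label_smoothing / num_classes
        for y in onehot
    ]

smoothed = smooth_labels([0.0, 1.0], 0.2)
print([round(v, 3) for v in smoothed])  # [0.1, 0.9]
```

Setting label_smoothing to 0 returns the original hard labels, which makes the parameter easy to switch off when comparing training runs.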

Learning to Purify Noisy Labels via Meta Soft Label Corrector By viewing the label correction procedure as a meta-process and using a meta-learner to automatically correct labels, we could adaptively obtain rectified soft labels iteratively according to current training problems without manually preset hyper-parameters.

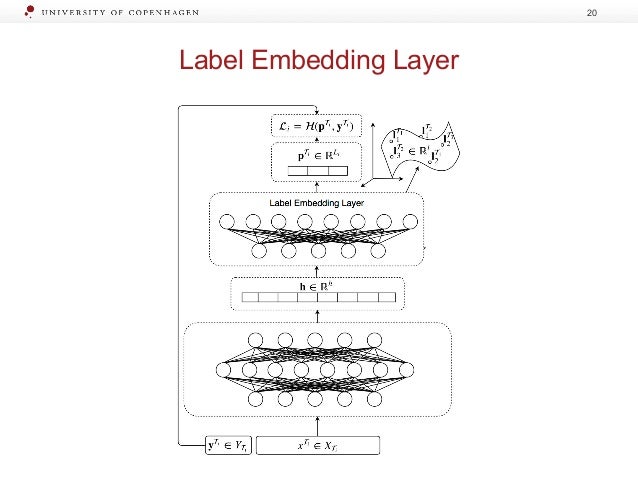

Learning Soft Labels via Meta Learning One-hot labels do not represent soft decision boundaries among concepts, and hence, models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

Data Labeling Software: Best Tools For Data Labeling in 2021 Labelbox. Labelbox is a popular data labeling tool that offers an iterative workflow process for accurate data labeling and creating optimized datasets. The platform interface provides a collaborative environment for machine learning teams, so that they can communicate and devise datasets easily and efficiently.

Learning from Noisy Labels with Deep Neural Networks: A Survey Deep learning has achieved remarkable success in numerous domains with help from large amounts of big data. However, the quality of data labels is a concern because of the lack of high-quality ...

Knowledge distillation in deep learning and its applications - PMC Soft labels refer to the output of the teacher model. In the case of classification tasks, the soft labels represent the probability distribution among the classes for an input sample. The second category, on the other hand, considers works that distill knowledge from other parts of the teacher model, optionally including the soft labels.
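As a rough illustration of soft labels in distillation, the sketch below turns a teacher's logits into a probability distribution and scores a student against it with cross entropy. The logit values and function names are assumptions, and practical distillation setups often add details (such as a softmax temperature) that are not described in the snippet above and are left out here.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def soft_cross_entropy(student_logits, soft_labels):
    """Cross entropy of the student's distribution against soft labels."""
    student_probs = softmax(student_logits)
    return -sum(t * math.log(s) for t, s in zip(soft_labels, student_probs))

teacher_logits = [3.0, 1.0, 0.2]       # illustrative teacher output
soft_labels = softmax(teacher_logits)  # the teacher's soft labels

# A student that reproduces the teacher's logits incurs a lower loss
# than one whose class ranking is reversed.
loss_matching = soft_cross_entropy(teacher_logits, soft_labels)
loss_mismatched = soft_cross_entropy([0.2, 1.0, 3.0], soft_labels)
print(loss_matching < loss_mismatched)  # True
```

The loss is smallest when the student's distribution equals the teacher's, which is why minimizing it pulls the student toward the teacher's full class distribution rather than only its top class.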

What is the definition of "soft label" and "hard label"? A soft label is one which has a score (probability or likelihood) attached to it. So the element is a member of the class in question with a probability/likelihood score of, e.g., 0.7; this implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.

How To Label Data For Semantic Segmentation Deep Learning Models? Labeling the data for computer vision is challenging, as there are multiple types of techniques used to train the algorithms that can learn from data sets and predict the results. Image annotation...

Validation of Soft Labels in Developing Deep Learning Algorithms for Detecting Lesions of Myopic Maculopathy From Optical Coherence Tomographic Images The predicted possibilities from the models trained by soft labels were close to the results made by myopia specialists.
